How to transfer or export SQL Server

2005 data to Excel.

Download SQL

Server interview

Test your SQL Server Skills!

|

Book Excerpt: Transferring Data to a Microsoft Excel File

This chapter shows you how to create a package that can transfer data from a

table in an SQL Server 2005 Server database to a Microsoft Excel Spreadsheet.

You will also learn how to use a Character Map Data Transformation. In the

hands-on exercise, you will be transferring data retrieved from an SQL 2005

table to MS Excel 2003 spreadsheet file. You will be using a Data Flow Task

consisting of a source connected to a SQL 2005 Server-based connection manager,

as in the previous chapter, and an Excel Destination connected to an Excel

connection manager.

|

This excerpt from

Beginners Guide to SQL Server Integration Services Using Visual Studio 2005

by Jayaram Krishnaswamy, is printed with permission from Packt

Publishing, Copyright 2007.

|

This exercise consists of the following major steps:

|

|

In order to follow the steps

as indicated, you will need a source and a destination: the source data is

extracted from the MyNorthwind database (just a renamed version of the

Northwind database) on the SQL Server 2005 Server, and the destination is

loading this to an MS Excel 2003 spreadsheet file on your hard drive. You also

need to establish a path connecting them. In addition, you will also interpose

a Character Map Data Flow Task that will convert the text in one of the data

fields, so that all characters in that column are capitalized after the

transformation.

Step 1: Create a SQL Server BI project and add a Data Flow Task

In this section, you will be creating a Business Intelligence project and

changing the name of the default package object. Since it is data-related, you

will be adding a Data Flow Task. You will also be adding a DataReader component

to the data flow.

-

Create a business intelligence project Ch 5 as described in Chapter 2 or

Chapter 3.

-

Change the default name of package from Package.dtsx to TableToXls.dtsx.

-

Drag and drop a Data Flow Task from the Toolbox onto the Control Flow page.

-

Click open the Data Flow tab, which displays the Data Flow page.

Now, you will be able to access the Data Flow Items of the Toolbox consisting

of Data Flow Sources, Data Flow Destinations, and Data Flow Transformations

(refer to Chapter 1).

-

Drag and drop a DataReader Source from the Data Flow Sources group onto the

Data Flow page.

Step 2: Configure the DataReader's Connection Manager

Configuring the DataReader source that connects to the local SQL Server 2005 has

been described in earlier chapters. Here, only a couple of the images relevant

to this chapter will be shown.

-

Right-click inside the Connection Managers page below the Canvas, and from the

drop-down choose New ADO.Net Connection….

If you are continuing with this chapter after Chapter 4, you will see the

Configure ADO.NET Connection Manager screen displaying the previously

configured connection manager. If you need to create a new one, follow the

steps shown in the previous chapter.

-

Click on the OK button in the Configure ADO.NET Connection Manager window.

A connection manager, Localhost.MyNorthwind.sa, will be added to the Connection

Manager's page.

Step 3: Set up the DataReader Source for SQL Server data

-

Right-click the DataReader Source, in the drop-down menu.

-

Choose the Edit… menu item in the drop-down menu.

This opens the Advanced Editor for DataReader Source. At first, you need to

indicate a connection manager that the DataReader can use.

-

In the Advanced Editor for DataReader Source that gets displayed click on the

Connection Managers tab.

-

Click on an empty area (in grey) below the list heading, Connection Manager.

Here, you will see the connection manager that you added in step 1.

-

Choose this Connection Manager.

-

Next, click on the Component Properties tab to open the properties of the

DataReader component.

Here, you will notice that this requires an SQLCommand (the only empty field

now).

-

Click on the ellipsis button along its side to display a text editor where you

can type in your SQLCommand.

You may also directly type-in the SQL Command:

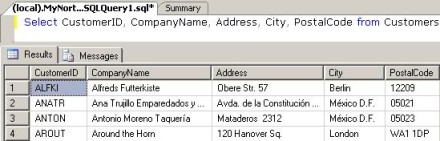

SELECT CustomerID, CompanyName, Address, City, PostalCode FROM Customers

-

Click on the Refresh button.

This query will allow the DataReader to read the data from the five columns. A

sample of the table data is shown in the following screenshot, taken from SQL

Server 2005 Management Studio. If you recall, these were the same columns that

were used in the previous chapter as well.

-

Click on the Column Mappings tab.

This will open the Column Mappings page showing the columns that are the output

of the DataReader.

In the last tab on this editor, Input and Output properties, you can add/

remove items from the External Columns, the Output Columns and the DataReader

Error output. For this tutorial, no modifications are made.

-

Click on the OK button in the above window.

This completes the configuration of the DataReader which brings five columns

from the SQL 2005 Server.

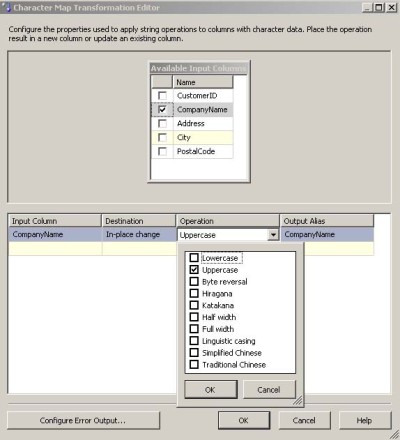

Step 4: Install a Character Map for SQL Server data transformation

The Character Map transformation is described in Chapter 1, but here you will be

experimenting with this transformation. The transformation manipulates the text

string that is coming to it and outputs the manipulated string. For example, in

the screenshot we have just seen above, the CompanyName has mixed case. Using

this transformation, we will capitalize all the characters that appear in the

CompanyName column before it is written to an Excel File—Alfreds Futterkiste

will become ALFREDS FUTTERKISTE, etc.

-

Drag and drop a Character Map data flow item from the Data Flow Transformations

Group in the Toolbox onto the Data Flow page of the Canvas.

-

Right-click on the DataReader Source and from the drop-down click on the Add

Path menu item.

-

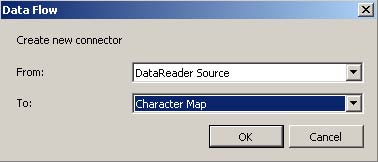

From the displayed window, Data Flow, choose Character Map for the To: field as

shown.

-

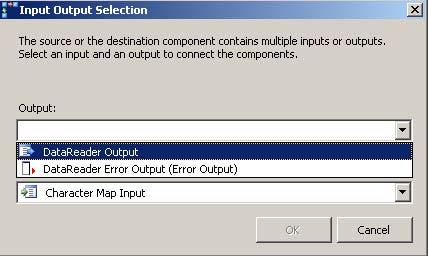

Click OK in the above window, and the following window is displayed.

Here, you need to indicate the output from this component, from the drop-down

shown.

-

Choose DataReader Output Source from the drop-down.

At present, you will not be dealing with any errors in this tutorial. When you

choose the above option, the OK button gets enabled.

-

Click on the OK button in the above window.

This establishes the path from the Data Reader Source to the Character Map data

flow component. The path is established but it still needs configuration.

-

Right-click the Character Map component and from the drop-down menu choose,

Edit.

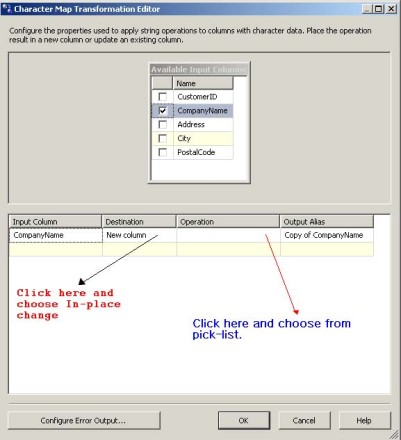

This opens the Character Map Editor, as shown in the following screenshot.

Place a check mark for the Company Name column.

If the default as shown in the above were to be chosen, then an extra column

will be added to the output. We choose the option In-place change from the

drop-down.

-

Click on the cell, New Column, under Destination in the above window. From the

drop-down choose, In-place change.

-

Click just below the Operation list-header and from the drop-down list choose

Upper Case as shown in the following screenshot.

The output alias remains the same as it is an in-line change.

-

Click on the button OK in the pick-up list and to the OK button in the

Character Map Transformation Editor.

This completes the Character Map configuration.

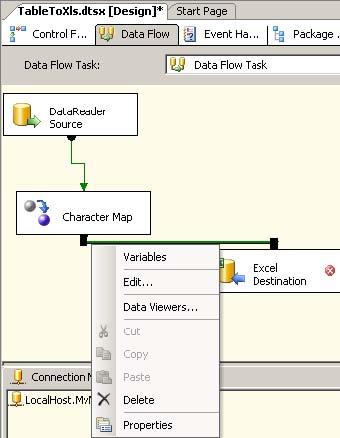

Step 5: Add an Excel destination and create path to Character Map

In this step, we will add an Excel Destination. We will then establish a path

from the Character Map to Excel Destination.

-

Add an Excel Destination component from the Data Flow Destinations group in the

toolbox to the Data Flow page.

This can be accomplished either by double-clicking the component in the Toolbox

or a drag-and-drop operation.

-

Right-click Character Map and from the drop-down menu choose Add Path.

This opens up the window, Data Flow, which allows you to establish a data flow

path, and displays the "From:" location as Character Map.

-

Click on the drop-down along "To:", which shows both the Excel Destination as

well as the DataReader Source.

-

Choose the Excel Destination and click OK to the screen.

This opens up the Input Output Selection window that shows the available output

and input windows. The Output window is empty whereas the input shows Excel

Destination Input. The path should connect from the Character Map to the Excel

Destination Input.

-

Choose the above options and click on the OK button on this screen.

You will see a green line connecting the Character Map Data Flow Component to

the Excel Destination, as shown in the next screenshot. Alternately, the

process of establishing the path can be simplified by just picking the green

dangling line from Character Map and dropping it onto the Excel Destination

object on the Data Flow page. As seen in the next screenshot, you may also edit

the path after it is created by right-clicking on this green line and choosing

the Edit… drop-down menu item.

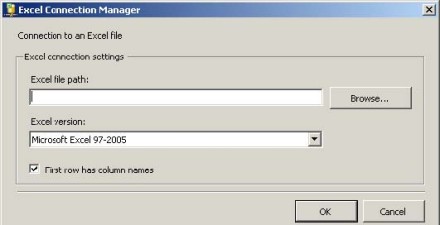

Step 6: Configure the Microsoft Excel Destination component

The data is on its way through the path, represented by the green line in the

previous step. The Excel Destination also requires a connection manager.

The Excel Destination connects to an MS Excel on your hard dive using the

connection properties defined in a connection manager.

-

Right-click the Excel Destination and from the drop-down menu choose Edit.

This displays the Excel Destination Editor. Excel requires an OLE DB connection

manager and if there are no configured connection managers (by you or a

previous user) the drop-down will be empty.

-

Click on the New… button.

The Excel Connection Manager window gets displayed as shown in the next

screenshot. Here, you need to use the Browse button and pick the Excel file as

the destination. The data will be written to the destination when the package

is run.

-

Open Windows Explorer and create an Excel file in the C:drive. For this

tutorial, TableToXls.xls is chosen.

Besides connecting to an existing file, the Excel Connection Manager supports

creating a file on the folder of your choice in the machine using the Browse…

button.

-

Now browse to the newly created file using the Browse… button and choose this

file.

-

Click on the OK button in the Excel Connection Manager window.

-

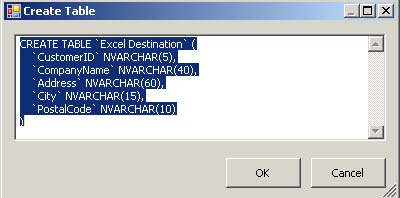

For the Data Access Mode, accept the default, Table or View.

You have to indicate the name of the Excel sheet that will be used. (Do not

click on the drop-down for locating the sheet. The drop-down will show the

three Excel sheets that are found in a newly created Excel worksheet file; all

of them having just one column each.)

-

Click on the New… button.

You are creating a new Excel sheet. This pops-up a Create Table window showing

the columns that are being piped into the component, as shown in the following

screenshot.

-

Click on the OK button, shown in the previous screenshot.

A new Excel Sheet, Excel Destination, will be added to the TableToXLS.xls file.

If you now open and review this file (TableToXls.xls) you will see the column

headers are added to this sheet.

-

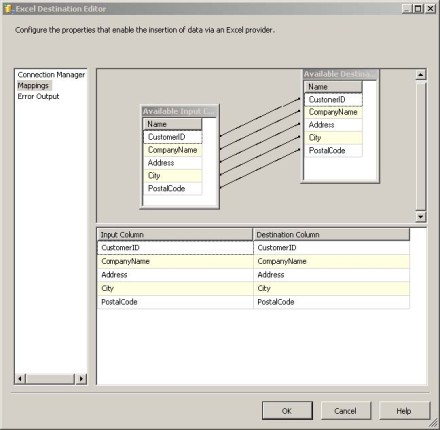

Click on the Mappings in the left-hand-side pane of the Excel Destination

Editor, which shows the mappings from the input to the output.

This shows all the columns from the Character Map Data Flow Component being

written to the destination file, as shown in the following screenshot.

-

Click on the OK button in this window. The package is now completely configured

and ready for execution.

Step 7: Test data transfer from SQL Server table to Excel

Spreadsheet

-

Right-click the TableToXls.dtsx in the Solution Explorer and from the drop-down

choose, Execute Package.

All three components on the Data Flow page turn green, indicating that the

package executed successfully without errors. You may review the Progress tab

in the 'Canvas', which shows all the details of the execution of the package.

-

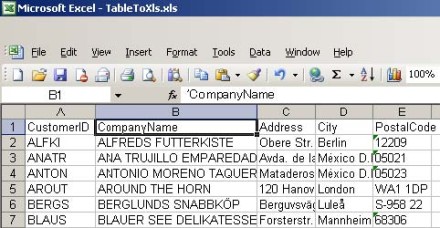

Now open up the TableToXLs.xls file and review.

A few rows of data are shown in the next screenshot. Notice that the Character

Map Data Transformation component has capitalized all the characters in the

CompanyName column.

Summary

This chapter described the following:

-

Configuring data flow components that are necessary for transferring data from

a single table in SQL Server 2005 to an Excel file.

-

The usage of a Character Map Data Transformation component.

The reverse of this transfer can be accomplished by choosing an Excel Source and

a SQL Server Destination.

Also read

Here you will learn how to copy a table on an Oracle 10G XE database to a

database on the SQL Server 2005. You will also learn how to install an Oracle

10G XE server and work with its database objects.

What is SQL Server 2005 Analysis Services (SSAS)?

What are the new features with SQL Server 2005 Analysis Services (SSAS)?

What are SQL Server Analysis Services cubes?

Explain the purpose of synchronization feature provided in Analysis Services

2005.

Explain the new features of SQL Server 2005 Analysis Services (SSAS). [Hint -

Unified Dimensional Model, Data Source View, new aggregation functions and

querying tools]....................

RAID is a mechanism of storing the same data in different locations. Since the

same data is stored, it is termed as redundant............

SQL

Server Interview questions part 2 includes following questions with

answers

Difference between DELETE and TRUNCATE commands in SQL

Server. | What are constraints in SQL Server? | What is an index? | What is

RAID? | Ways to troubleshoot performance problems in SQL Server. | Steps to

secure an SQL Server. | What is a deadlock and what is a live lock? | What is

blocking and how would you troubleshoot it? | Ways of moving data between

servers and databases in SQL Server? | Explian different types of BACKUPs

avaialabe in SQL Server? | What is database replicaion?

SQL

Server Interview questions part 3 includes following questions with

answers

What are cursors in SQL Server? | What is a join and explain different types of

joins. | What is an extended stored procedure in SQL Server? | What are

triggers in SQL Server? | What is a self join in SQL Server? | What is the

difference between UNION ALL Statement and UNION? | Write some disadvantage of

Cursor? | What is Log Shipping in SQL Server?

SQL

Server Interview questions part 4 includes following questions

with answers

What are the different types of Locks in SQL Server? | What is Extent and types

of Extent? | What is the use of DBCC commands defined in SQL Server? | Define

COLLATION in SQL Server? | Where is users names and passwords stored in SQL

Server? | What is BCP? | How can we move data along with schema of the server

object? | Define sub-query in SQL Server? | Define sp_grantlogin, sp_denylogin

and sp_revokelogin. | Write SQL query to retrieve all tables of the database.

Define distributed queries.

Describe how Linked server is used to excess external data.

Describe how OPENQUERY function is used to excess external data.

Describe how OPENROWSET and OPENDATASOURCE function is used to access external

data...............

|